The Push for Global Regulation, and Ethics vs. Power

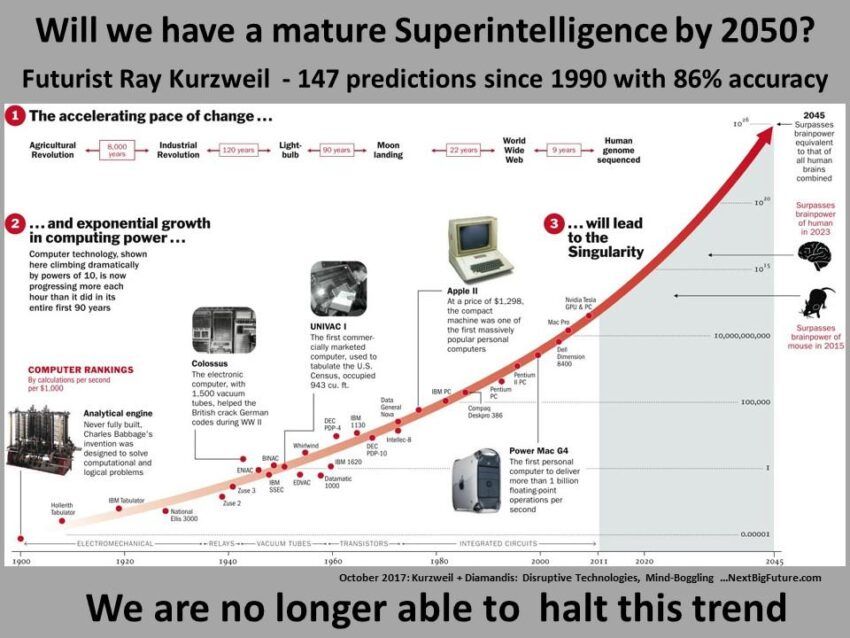

Recently, a collective outcry from OpenAI’s most respected figures has echoed through the field of artificial intelligence. Chief Executive Officer Sam Altman, President Greg Brockman, and Chief Scientist Ilya Sutskever voiced their shared concern regarding the impending arrival of superintelligent AI. In a comprehensive blog post, they offered a picture of the future that left no doubt as to their worries.

Delving into the concerns of these AI pioneers offers us a sobering glimpse into a potentially tumultuous future. This impending era, charged with the electrifying promise and peril of superintelligent AI, is drawing closer every day. As we proceed to unpack their arguments and decipher the true intentions behind their public plea, one thing is clear – the stakes are incredibly high.

A Cry for Global Governance of Superintelligent AI

Indeed, their stark portrayal of a world on the precipice of an AI revolution is chilling. Yet, they propose a solution that is equally bold, advocating for robust global governance. While their words bear weight given their collective experience and expertise, their proposal also raises questions, igniting a debate over the underpinnings of their plea.

The balance of control and innovation is a tightrope walked by many sectors, but perhaps by none as precariously as AI. Because AI has the potential to restructure societies and economies, the call for governance carries a sense of urgency. Yet, the rhetoric employed and the motivation behind such a call warrant scrutiny.

The IAEA-Inspired Proposal: Inspections and Enforcement

Their blueprint for such an oversight body, modeled on the International Atomic Energy Agency (IAEA), is ambitious. An organization with the authority to conduct inspections, enforce safety standards, carry out compliance testing, and enact security restrictions would undeniably exert considerable power.

This proposal, while seemingly sensible, puts forth a robust structure of control. It paints a picture of a highly regulated environment, which, while ensuring the safe progression of AI, may also give rise to questions about potential overreach.

Aligning Superintelligence with Human Intentions: The Safety Challenge

OpenAI’s team is candid about the herculean challenge ahead. Superintelligence, a concept once confined to the realm of science fiction, is now a prospect we must grapple with. The task of aligning this powerful force with human intentions is fraught with hurdles.

The question of how to regulate without stifling innovation is a paradox they acknowledge. It’s a balancing act they must master to safeguard humanity’s future. Still, their stance has raised eyebrows, with some critics suggesting an ulterior motive.

Conflicting Interests or Benevolent Guardianship?

Critics contend that Altman’s fervent push for stringent regulation could be serving a dual purpose. Could the safeguarding of humanity be a screen for an underlying desire to stifle competitors? The theory might seem cynical, but it has ignited a conversation around the subject.

The Curious Case of Altman vs. Musk

The rumor mill has produced a narrative suggesting a personal rivalry between Altman and Elon Musk, the maverick CEO of Tesla, SpaceX and Twitter. There is speculation that this call for heavy regulation might be driven by a desire to undermine Musk’s ambitious AI endeavors.

Whether these suspicions hold water is unclear, but they contribute to the overall narrative of potential conflicts of interest. Altman’s dual roles as CEO of OpenAI and as an advocate for global regulation are under scrutiny.

OpenAI’s Monopoly Aspirations: A Trojan Horse?

Furthermore, critics wonder if OpenAI’s call for regulation masks a more Machiavellian objective. Could the prospect of a global regulatory body serve as a Trojan horse, allowing OpenAI to solidify its control over the development of superintelligent AI? The possibility that such regulation might enable OpenAI to monopolize this burgeoning field is disconcerting.

Walking the Tightrope: Can Altman Navigate Conflicts of Interest?

Sam Altman’s ability to successfully straddle his roles is a subject of intense debate. It’s no secret that wearing two hats, as CEO of OpenAI and as an advocate for global regulation, poses potential conflicts. Can he push for policy and regulation while simultaneously spearheading an organization at the forefront of the technology he seeks to control?

This dichotomy doesn’t sit well with some observers. Altman, with his influential position, stands to shape the AI landscape. Yet, he also has a vested interest in OpenAI’s success. This duality could cloud decision-making, potentially leading to biased policies favoring OpenAI. The potential for self-serving behavior in this situation presents an ethical quandary.

The Threat of Stifling Innovation

While OpenAI’s call for stringent regulation aims to ensure safety, there’s a risk it might hinder progress. Many fear that heavy-handed regulation could stifle innovation. Others worry it could create barriers to entry, discouraging startups and consolidating power in the hands of a few players.

OpenAI, as a leading entity in AI, could benefit from such a scenario. Therefore, the intentions behind Altman’s passionate call for regulation come under intense scrutiny. His critics are quick to point out the benefits that OpenAI stands to gain.

In the Pursuit of Ethical Governance

Against the backdrop of these suspicions and criticisms, the pursuit of ethical AI governance continues. OpenAI’s call for regulation has indeed spurred a necessary conversation. AI’s integration into society necessitates caution, and regulation may provide a safety net. The challenge is ensuring that this protective measure doesn’t transform into a tool for monopolization.

A Convergence of Power and Ethics: The AI Dilemma

The AI field finds itself at a crossroads, a junction where power, ethics, and innovation collide. OpenAI’s call for global regulation has sparked a lively debate, underscoring the intricate balance between safety, innovation, and self-interest.

Altman, with his influential position, is both the torchbearer and a participant in the race. Will the vision of a regulated AI landscape ensure humanity’s safety, or is it a clever ploy to edge out competitors? As the narrative unfolds, the world will be watching.

Disclaimer

Following the Trust Project guidelines, this feature article presents opinions and perspectives from industry experts or individuals. BeInCrypto is dedicated to transparent reporting, but the views expressed in this article do not necessarily reflect those of BeInCrypto or its staff. Readers should verify information independently and consult with a professional before making decisions based on this content.